In 1993, two landmark PC games were released within months of each other: id Software's Doom and Cyan Worlds' Myst. Doom is a fast-paced action game viewed from a first-person perspective, with detailed weapons, demons and gore; Myst is a point-and-click puzzle adventure on a creepy island.

Doom was famously implemented using computer science techniques novel for games, such as binary space partitioning to determine the visibility of objects. In contrast, Myst was technically unsophisticated - its first version was built in HyperCard - but it was still lauded for "photorealistic" graphics that demanded an entire compact disc of data.

The core reason for Myst's graphical fidelity is obvious to the millions who have seen both games: Doom's visuals animate smoothly, redrawing many times per second, while Myst's visuals are pre-rendered images that change only when the player clicks. In other words, Doom was a game with real-time rendering, which demanded orders of magnitude more computation and development effort than Myst.

Click Navigation in Myst

A slideshow of images, with a tiny cross-fade transition, gives the illusion of moving around the Myst island. The choppiness of this experience did not stop Myst from being a compelling game.

Camera movement in Doom

Doom's smooth camera movement is a hard requirement for aiming and shooting at space demons; Doom's engine, id Tech 1, was reused for other first-person games like Chex Quest.

1993's PC gaming techniques today

Nearly 30 years later, the core difference between the graphics of Myst and Doom reveals itself again in a more universal computing experience: digital maps on the web.

There are effectively two strategies for implementing interactive, zoomable maps in 2021. They are often called raster maps and vector maps, but these names mostly describe the networking parts of a map system, and they hide a spectrum of possible compromise designs. The key distinction is where rasterization - the conversion of shapes to pixels - happens. Unless you are using a vector monitor, you are looking at raster images on your display right now, but that rasterization may have happened on your device's CPU or GPU, or even on a different computer entirely.
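The following TypeScript sketch makes "the conversion of shapes to pixels" concrete. It is a minimal CPU rasterizer and is illustrative only: it marks a pixel as filled when that pixel's center falls inside a polygon under the even-odd rule. Real rasterizers, on a tile server or in a GPU, use faster scanline or edge-function methods and anti-aliasing. The function names here are hypothetical.

```typescript
// A point is [x, y] in pixel units.
type Point = [number, number];

// Even-odd rule (the classic PNPOLY ray-casting test): cast a ray to the
// right of (px, py) and toggle "inside" for every polygon edge it crosses.
function insidePolygon(px: number, py: number, polygon: Point[]): boolean {
  let inside = false;
  for (let i = 0, j = polygon.length - 1; i < polygon.length; j = i++) {
    const [xi, yi] = polygon[i];
    const [xj, yj] = polygon[j];
    const straddles = (yi > py) !== (yj > py);
    if (straddles && px < ((xj - xi) * (py - yi)) / (yj - yi) + xi) {
      inside = !inside;
    }
  }
  return inside;
}

// Rasterize: a pixel is "on" if its center lies inside the polygon.
function rasterize(polygon: Point[], width: number, height: number): boolean[][] {
  const grid: boolean[][] = [];
  for (let y = 0; y < height; y++) {
    const row: boolean[] = [];
    for (let x = 0; x < width; x++) {
      row.push(insidePolygon(x + 0.5, y + 0.5, polygon));
    }
    grid.push(row);
  }
  return grid;
}

// Usage: a square from (1, 1) to (3, 3) on a 4x4 pixel grid.
const square: Point[] = [[1, 1], [3, 1], [3, 3], [1, 3]];
const pixels = rasterize(square, 4, 4);
console.log(pixels.map((row) => row.map((on) => (on ? "#" : ".")).join("")).join("\n"));
// ....
// .##.
// .##.
// ....
```

Whether this loop runs on a map server, on the device CPU, or as massively parallel GPU work is exactly the design choice the rest of this article is about.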

Vector rendering

A true vector graphics application: Asteroids implemented on an oscillograph. Unless you are using one of these, rasterization is happening somewhere.

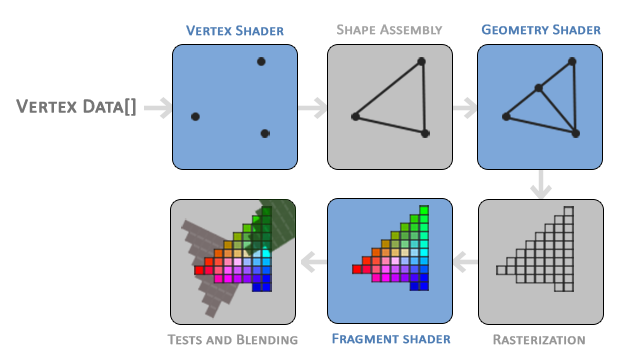

Modern Graphics Processing Unit (GPU) rendering

Vector features exist as arrays of vertices in GPU memory; the GPU rasterizes them to pixels efficiently, with programmable shaders transforming the vertices and coloring the resulting fragments. Rasterization happens as late as possible, in parallel, without a round trip to the CPU.

The "Myst" approach to map rendering

So-called raster maps are best embodied by Leaflet, an extremely popular library for displaying zoomable, pannable maps with geographic overlays. The typical flow from geodata to pixels with a Leaflet map goes something like this:

- Map data exists in vector form, in a format like GeoJSON, ESRI Shapefile, or PostGIS tables.

- At some point - either on-demand or preprocessed - this data is rasterized into image tiles via a program like MapServer or Mapnik. Images can be PNGs, JPGs, whatever - they are all the same size, and correspond to regions of Earth based on the Web Mercator projection.

- The map tiles are displayed in a web browser via img tags. Since images are everywhere on the web, browsers are designed to fetch images off-thread very efficiently and to animate them with GPU acceleration.
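The mapping from a place on Earth to the tile that covers it can be sketched with the widely used "slippy map" Web Mercator tile formula. This is an illustrative TypeScript version, not code from Leaflet, MapServer or Mapnik. The function name and the clamping choices are assumptions.

```typescript
// Web Mercator stops near ±85.05° latitude, where the projected map is square.
const MAX_LATITUDE = 85.0511287798;

interface TileCoord { z: number; x: number; y: number; }

// Convert longitude/latitude in degrees to the XYZ tile containing that point.
function lonLatToTile(lon: number, lat: number, z: number): TileCoord {
  const n = 2 ** z; // tiles along each axis at this zoom level
  const clampedLat = Math.max(-MAX_LATITUDE, Math.min(MAX_LATITUDE, lat));
  const latRad = (clampedLat * Math.PI) / 180;
  const xFrac = ((lon + 180) / 360) * n;
  const yFrac = ((1 - Math.log(Math.tan(latRad) + 1 / Math.cos(latRad)) / Math.PI) / 2) * n;
  // Clamp so that lon = 180 or the latitude limit still map to a valid tile.
  const clampIndex = (v: number) => Math.min(n - 1, Math.max(0, Math.floor(v)));
  return { z, x: clampIndex(xFrac), y: clampIndex(yFrac) };
}

console.log(lonLatToTile(0, 0, 1)); // { z: 1, x: 1, y: 1 }
```

Every tile covers the same number of pixels, commonly 256x256, so each zoom level has four times as many tiles as the one before it. This is why pre-rendering whole zoom ranges becomes expensive at high zooms.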

Some free or commercial services offer pre-rendered image tiles directly, like the default rendering of OpenStreetMap data on openstreetmap.org, creative cartographic styles by Stamen Design, basemap tiles for visualization by CARTO, or Wikimedia maps embedded on Wikipedia. APIs may offer hi-DPI image tiles for Retina displays; the general conventions around these image map tiles are encoded in standards like WMTS and can be consumed by other libraries like OpenLayers or Pigeon Maps, a React-centric library.
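Tile services are typically consumed through a URL template with placeholders for the zoom and tile indices. The helper below is a hypothetical sketch. The `{z}/{x}/{y}` placeholders follow the common XYZ convention. The `{r}` placeholder, the "@2x" hi-DPI suffix and the tiles.example.com host are assumptions for illustration. Check the real provider's documentation and usage policy.

```typescript
// Fill an XYZ tile URL template. {z}, {x}, {y} follow the common convention;
// the {r} placeholder and "@2x" suffix for hi-DPI tiles are assumptions.
function tileUrl(template: string, z: number, x: number, y: number, devicePixelRatio = 1): string {
  const retinaSuffix = devicePixelRatio > 1 ? "@2x" : "";
  return template
    .replace("{z}", String(z))
    .replace("{x}", String(x))
    .replace("{y}", String(y))
    .replace("{r}", retinaSuffix);
}

// Hypothetical tile server, for illustration only.
const template = "https://tiles.example.com/{z}/{x}/{y}{r}.png";
console.log(tileUrl(template, 3, 4, 2));    // https://tiles.example.com/3/4/2.png
console.log(tileUrl(template, 3, 4, 2, 2)); // https://tiles.example.com/3/4/2@2x.png
```

Because every tile is a plain, cacheable URL, a CDN and the browser's HTTP cache can do most of the work. This simplicity is a large part of the raster approach's appeal.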

The crucial compromise of this approach is that tiles exist only at discrete zoom levels, with no intermediate states. If rasterization happens on a server somewhere Out There on the Internet, the architecture is not responsive enough to truly show in-between images; systems like Leaflet use clever animations to hide this fact.

Leaflet + OpenStreetMap.org tiles

A popular map style displayed on openstreetmap.org; zooming in jumps between different zoom levels; a 250 millisecond scale transition is handled by Leaflet.

Google Maps Fallback

Google Maps loads a stepped-zoom fallback map renderer initially, and then swaps to a WebGL-based real-time renderer if browser support is detected. It snaps between zooms with no animation.

As in Myst, a cross-fade animation helps achieve persistence of vision for what is actually a totally new image. "Cheating" in this way for maps can be acceptable if the blurry appearance of the map in transition is not important.
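The scale-then-cross-fade trick can be sketched as a function that computes what to draw on each animation frame. This is not Leaflet's actual implementation. The 250 ms duration comes from the openstreetmap.org example above. The ease-out curve, the linear fade and all names are assumptions.

```typescript
interface TransitionFrame {
  zoom: number;           // interpolated zoom shown on screen
  oldTileScale: number;   // CSS scale applied to the previous level's tiles
  newTileOpacity: number; // opacity of the incoming level's tiles
}

// Ease-out cubic: fast at first, slowing toward the end (assumed easing).
const easeOutCubic = (t: number) => 1 - (1 - t) ** 3;

function transitionFrame(fromZoom: number, toZoom: number, elapsedMs: number, durationMs = 250): TransitionFrame {
  const t = Math.min(1, Math.max(0, elapsedMs / durationMs));
  const zoom = fromZoom + (toZoom - fromZoom) * easeOutCubic(t);
  return {
    zoom,
    // Each zoom level doubles the scale, so the stretch factor is exponential.
    oldTileScale: 2 ** (zoom - fromZoom),
    newTileOpacity: t,
  };
}

// Sample the start, middle and end of a zoom from level 5 to level 6.
for (const ms of [0, 125, 250]) {
  const f = transitionFrame(5, 6, ms);
  console.log(ms, f.zoom.toFixed(3), f.oldTileScale.toFixed(3), f.newTileOpacity);
}
```

In a browser, this would typically be driven by `requestAnimationFrame`, with `oldTileScale` applied as a CSS `transform: scale(...)`. During the transition, the old tiles are simply stretched bitmaps, which is where the blurry in-between appearance comes from.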

Fractional zoom levels

Can infinitely many fractional zoom states be supported by rounding to the nearest tile level and scaling the images? This works fine if your images are satellite photographs, because the features in such maps are intended to be scaled in world space:

- At zoom level 5, a feature is 20 px wide.

- At zoom level 6, the feature is 40 px wide.

- At zoom level 5.5, the feature is about 28 px wide (20 × 2^0.5): a bit less than the linear midpoint of 30 px, because size grows exponentially with zoom.
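The world-space relationship in the list above comes down to one exponential. The sketch below uses a hypothetical helper name.

```typescript
// World-space features grow with the map: each zoom level doubles their size.
function worldSpaceSize(sizeAtBaseZoom: number, baseZoom: number, zoom: number): number {
  return sizeAtBaseZoom * 2 ** (zoom - baseZoom);
}

for (const zoom of [5, 5.5, 6]) {
  console.log(zoom, worldSpaceSize(20, 5, zoom).toFixed(1));
}
// 5 20.0
// 5.5 28.3
// 6 40.0
```

Scaling an image tile by `2 ** (zoom - tileZoom)` produces exactly this curve. That is why stretched tiles look correct for world-space content such as aerial imagery.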

Infinite zoom levels

Displaying satellite imagery works just fine with img tags and continuous zoom; the images are scaled smoothly via CSS transforms before the next or previous level is loaded.

OpenSeadragon is another general purpose library for "deep zoom" images that implements fractional zooming with simple browser APIs; it works great for museum collections and satellite imagery.

But this breaks down if your intention is to keep features consistent in screen space, like cartographic map labels with an assigned font size of 20px, or schematic roads with an assigned width of 5px:

- At zoom level 5, the label is 20 px tall.

- At zoom level 6, the label is 20 px tall.

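- At zoom level 5.49999, the zoom 5 tiles are scaled up by about 1.41×, so the label is about 28 px tall.

- At zoom level 5.50000, rounding switches to zoom 6 tiles scaled down by about 0.71×, so the label is about 14 px tall.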
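The discontinuity in the list above can be reproduced with a few lines. The sketch assumes the round-to-nearest-level strategy described earlier, and the function name is hypothetical. A label is baked into each tile at a fixed pixel size, and the tile is then scaled to the fractional zoom.

```typescript
// Screen-space features (labels, schematic roads) are drawn at a fixed pixel
// size into each tile. If a fractional zoom is shown by picking the nearest
// tile level and scaling that tile, the baked-in feature is scaled as well.
function displayedSizeWithRoundedTiles(designPx: number, zoom: number): number {
  const tileZoom = Math.round(zoom);        // nearest pre-rendered level
  const tileScale = 2 ** (zoom - tileZoom); // CSS scale applied to the tile
  return designPx * tileScale;
}

for (const zoom of [5, 5.25, 5.49999, 5.5, 5.75, 6]) {
  console.log(zoom, displayedSizeWithRoundedTiles(20, zoom).toFixed(1));
}
```

The printed sizes grow steadily up to the rounding boundary, then drop sharply as soon as the next level's tiles take over. A real-time renderer avoids this by re-laying out labels at the exact fractional zoom.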

Hacking it with Leaflet

Attempting to display fractional zoom levels with pre-rasterized schematic maps means that the map is jarringly pixelated at some levels, and label sizes swing between too small and too large - coherent cartographic design becomes impossible.

The Myst approach for map display is unacceptable if you demand both screen-space rendering of features and fractional zoom levels. If you can compromise by having stepped zooms, non-realtime-rendered maps are significantly easier to implement.

The "Doom" approach to map rendering

The correct approach for fractional zoom is true real-time rendering, where map features are rasterized Doom-style: many times per second, ideally 30 to 60. This is only practical on GPUs, via a low-level API like OpenGL/WebGL, Direct3D or Metal.
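"30 to 60 times a second" translates into a fixed time budget per frame. The sketch below computes that budget and counts how many frames in a list of render times would miss it. The render times are hypothetical sample values, not measurements of any library. The sketch also simplifies by treating any frame over budget as a single visible stutter.

```typescript
// At a target frame rate, each frame has a fixed budget in milliseconds.
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

// Count frames whose render time exceeded the budget; each one shows up as a
// stutter, because the previous frame stays on screen longer than intended.
function framesOverBudget(renderTimesMs: number[], fps: number): number {
  const budget = frameBudgetMs(fps);
  return renderTimesMs.filter((ms) => ms > budget).length;
}

console.log(frameBudgetMs(60).toFixed(1)); // 16.7
console.log(frameBudgetMs(30).toFixed(1)); // 33.3
// Hypothetical render times, for illustration only.
console.log(framesOverBudget([9, 14, 22, 12, 41], 60)); // 2
```

A real-time map has to fit its per-frame main-thread work into roughly 16.7 ms at 60 fps. A static image tile has no such deadline once it is decoded.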

The pinnacle of this approach for developers to reuse in web apps is the source-available Mapbox GL JS library, or MapLibre GL JS, a fork that is still BSD-licensed. Google Maps for the web now uses its WebGL renderer in every browser that supports it; there are also less widely adopted renderers like Harp.GL and Tangram JS. The flow of data is something like this:

- Geographic features are projected into Web Mercator, sliced into tiles, and stored in a vector, SVG-like format such as GeoJSON or Mapbox Vector Tile (MVT) protocol buffers.

- Tiles are fetched over the network and vertex data is moved to GPU memory. Polygons need to be converted into triangles, because GL-like graphics APIs only display triangles and textures. Text needs to be converted to textures or signed distance fields for map labeling.

- Programmable shaders extrude linear features into screen-space lines - see Drawing Lines is Hard - and then composite them with triangle-ized polygons and texture-ized text on screen. A powerful map engine can allow user customization at any point in this pipeline.
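The line-extrusion step in the list above can be sketched on the CPU to show the geometry. Each polyline segment is widened along its normal into a quad of two triangles, with a width given in screen pixels. In engines like the ones named above, this work is typically done per vertex in a shader, with line joins, caps and anti-aliasing; this sketch deliberately omits all three. The names are hypothetical.

```typescript
type Vec2 = [number, number];

// Expand each segment of a polyline into a quad (two triangles) of the given
// width in screen pixels. Joins and caps are omitted; handling them well is
// where most of the real difficulty lies.
function extrudePolyline(points: Vec2[], widthPx: number): Vec2[] {
  const half = widthPx / 2;
  const triangles: Vec2[] = [];
  for (let i = 0; i < points.length - 1; i++) {
    const [x0, y0] = points[i];
    const [x1, y1] = points[i + 1];
    const len = Math.hypot(x1 - x0, y1 - y0);
    if (len === 0) continue; // skip degenerate segments
    // Unit normal perpendicular to the segment direction.
    const nx = -(y1 - y0) / len;
    const ny = (x1 - x0) / len;
    const a: Vec2 = [x0 + nx * half, y0 + ny * half];
    const b: Vec2 = [x0 - nx * half, y0 - ny * half];
    const c: Vec2 = [x1 + nx * half, y1 + ny * half];
    const d: Vec2 = [x1 - nx * half, y1 - ny * half];
    triangles.push(a, b, c, c, b, d); // two triangles forming the quad
  }
  return triangles;
}

// A GPU would receive the result as a flat vertex buffer.
const tris = extrudePolyline([[0, 0], [10, 0]], 2);
const buffer = new Float32Array(tris.length * 2);
tris.forEach(([x, y], i) => {
  buffer[2 * i] = x;
  buffer[2 * i + 1] = y;
});
console.log(buffer.length); // 12 (6 vertices × 2 coordinates)
```

Because the width is applied in screen pixels at draw time, a 5 px road stays 5 px wide at every fractional zoom. Pre-rasterized tiles cannot offer that.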

Google Maps WebGL

Google Maps uses WebGL for real-time rasterization, which means that text labels are always sized correctly, and features are never blurry, even when data has not finished loading for the displayed zoom level.

The web is a difficult platform for real-time rasterization

Map applications on native platforms - desktop, iOS, Android - take the real-time rendering approach, because they have direct access to powerful APIs for doing mappy things: Metal and Direct3D for drawing, Core Text and Uniscribe for displaying fonts. Apps for the web are constrained by a weak platform and by the need to be cross-browser, leading rich design tools like Figma to ship their own implementations of low-level graphics APIs.

How to build fast maps

Software developers and cartographers building mapping systems often describe an interactive map as fast or performant (sic) without any context for what performance actually means. Performance, in the abstract, is used to justify building a real-time rendered system over the "obsolete" technology of pre-rendered tiles. By most quantifiable measures - like vertices rasterized per second - GL maps blow away the alternatives.

What end users actually perceive as a high-performance web app is low latency. A web map should respond instantly to user input, zooming as soon as the wheel is scrolled and panning perfectly in sync with mouse movements. The point here is that a non-real-time rasterized map system can be as fast as a GL one, or faster, by showing only discrete zoom levels, minimizing network latency, and limiting the total amount of data sent to the browser.
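One concrete lever for limiting the data sent to the browser is to fetch only the tiles that overlap the viewport. The sketch below computes that range. It assumes integer world-pixel coordinates at the current zoom and 256 px tiles. It also ignores wrapping across the antimeridian and any prefetch margin, which real libraries usually add. The names are hypothetical.

```typescript
interface TileRange { minX: number; maxX: number; minY: number; maxY: number; count: number; }

// Given a viewport in world-pixel coordinates at the current zoom, find the
// tiles that overlap it. Fewer tiles means fewer requests and fewer bytes.
function tilesForViewport(leftPx: number, topPx: number, widthPx: number, heightPx: number, tileSize = 256): TileRange {
  const minX = Math.floor(leftPx / tileSize);
  const maxX = Math.floor((leftPx + widthPx - 1) / tileSize);
  const minY = Math.floor(topPx / tileSize);
  const maxY = Math.floor((topPx + heightPx - 1) / tileSize);
  return { minX, maxX, minY, maxY, count: (maxX - minX + 1) * (maxY - minY + 1) };
}

// A tile-aligned 1280x800 viewport vs. the same viewport panned by 100 px.
console.log(tilesForViewport(0, 0, 1280, 800).count);     // 20
console.log(tilesForViewport(100, 100, 1280, 800).count); // 24
```

Panning only changes which tiles are in range. Tiles that are already loaded can be moved around as textures, which helps explain why panning a stepped-zoom map can feel so responsive.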

WebGL Map Latency at 2560x1600

MapLibre GL displaying a typical cartographic map style at full screen resolution on a 2016 MacBook Pro. Zooming is nice, with real-time label collisions, but panning the map is choppy.

Leaflet map at 2560x1600

Leaflet displays chunky zoom levels - objectively worse than a real-time rasterized system - but panning the map has very low latency, because the GPU is simply moving textures around.

So which approach should I use?

It depends.

Decisions on mapping technology, and committing to tradeoffs, are wickedly cross-functional - spanning backend and frontend software engineering, graphic design, user experience and product management.

In the same way pre-rendered images were good enough to make Myst a best-selling game, non-realtime rendering of maps is good enough for some, maybe even the majority, of web map use cases today. If you're building a whizbang mapping demo with 3D flythroughs to show your company execs, a real-time-rendered system - the Doom approach - is the best choice; if you're building a site for helping people find the nearest COVID vaccine, the simplest solution is the best solution, and that usually means stepped-zoom maps.

Some good reasons to go real-time rendering:

- Your application is built around a full-screen interactive map, and smooth zooming is a core part of the experience.

- You need to display 3D geometry or rotate the map.

- You intend to display a high density of labels, and need real-time collision between them.
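Real-time label collision means deciding, as often as every frame, which labels fit on screen without overlapping. Below is a minimal greedy sketch using screen-space bounding boxes. It assumes each label already has a pixel box and a priority, and the names and sample labels are hypothetical. It is O(n²); production engines typically use a spatial index such as a grid, and they account for rotation and symbol padding.

```typescript
interface Label { text: string; x: number; y: number; width: number; height: number; priority: number; }

// Axis-aligned boxes overlap unless one lies entirely to one side of the other.
function overlaps(a: Label, b: Label): boolean {
  return a.x < b.x + b.width && b.x < a.x + a.width &&
         a.y < b.y + b.height && b.y < a.y + a.height;
}

// Greedy placement: try labels from highest to lowest priority and keep each
// one only if it does not collide with a label that was already placed.
function placeLabels(candidates: Label[]): Label[] {
  const placed: Label[] = [];
  const byPriority = [...candidates].sort((a, b) => b.priority - a.priority);
  for (const label of byPriority) {
    if (!placed.some((other) => overlaps(label, other))) placed.push(label);
  }
  return placed;
}

const labels: Label[] = [
  { text: "Village", x: 200, y: 0, width: 90, height: 20, priority: 1 },
  { text: "Capital", x: 0, y: 0, width: 100, height: 20, priority: 3 },
  { text: "Suburb", x: 50, y: 10, width: 100, height: 20, priority: 2 },
];
console.log(placeLabels(labels).map((l) => l.text)); // [ 'Capital', 'Village' ]
```

Because label boxes stay a fixed size in pixels while the map scales underneath them, the set of labels that fit changes continuously with zoom. A stepped-zoom system can resolve collisions once per level; a smoothly zooming one has to keep re-running this step.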

Some good reasons to use Leaflet-style maps:

- The map is just a part of the application, like an inset or locator map.

- The map is never zoomed, only panned or completely static.

- Chunky, stepped zooms are an acceptable user experience.